Staff Engineers Expand Influence, PR Review Pitfalls, The "Done" Myth, AI Coding Impact

Issue #66 Bytes

🌱 Dive into Learning-Rich Sundays with groCTO ⤵️

Article of the Week ⭐

“I raised my hand, and he said, ‘Oh, here we go again. What is it next, Jordan? Are you wondering why the sky is blue?’ At the time, I felt a bit embarrassed, but now I realize: curiosity is a superpower.”

Operating Principles That Guided Me to Staff Engineer

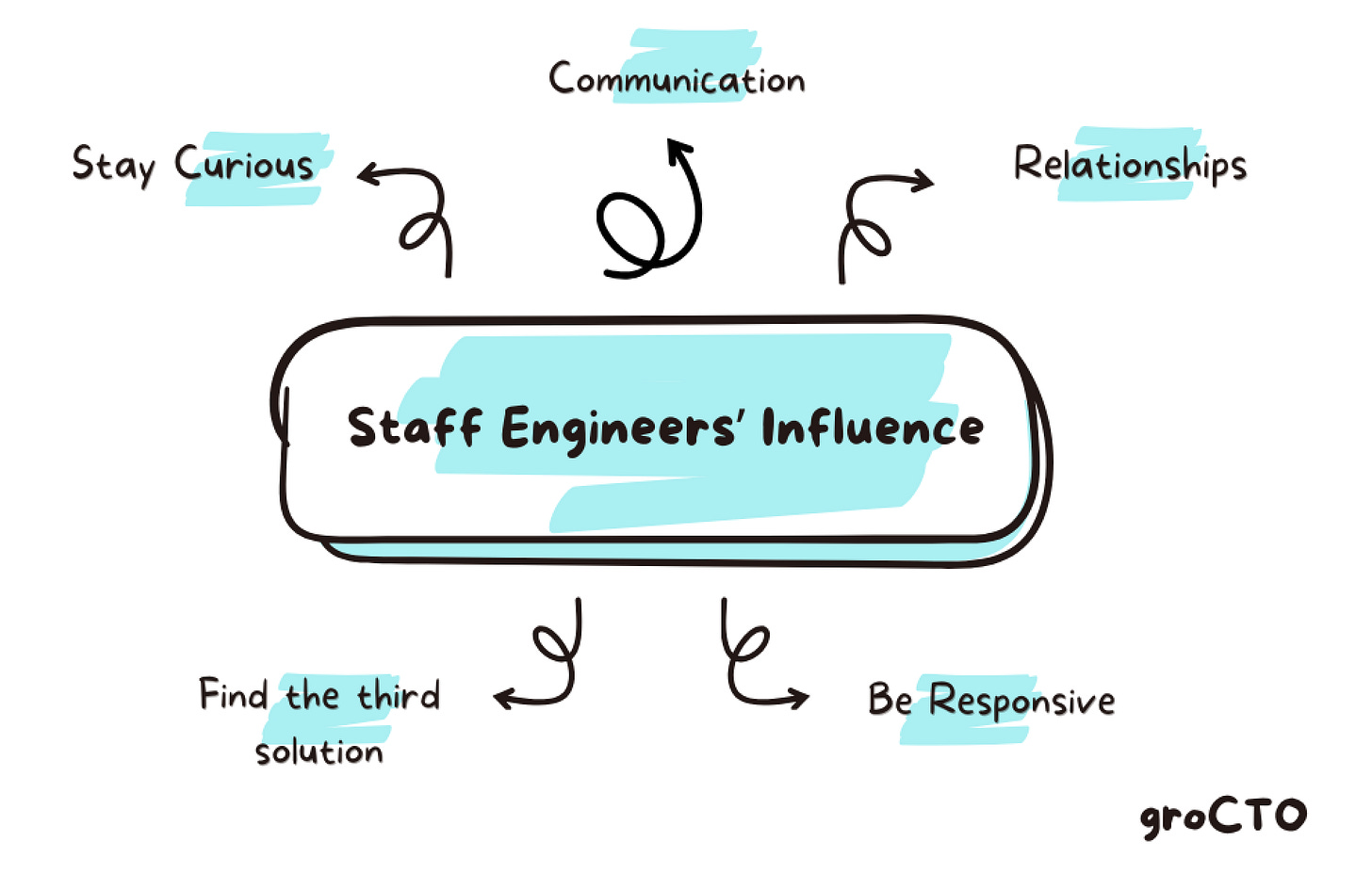

Getting to Staff is about being able to move entire systems of people forward. That doesn’t happen through raw technical chops alone; it also takes knowing how to use your influence. Influence earns you trust. Trust earns you autonomy. Autonomy lets you tackle bigger bets, which in turn compounds your impact.

Jordan Cutler shares five operating principles that helped him expand his influence across teams: daily behaviors that make approvals smoother, debates more productive, and collaboration less painful. These are the kinds of shifts that separate someone who “does great work” from someone seen as a leader others want to work with.

Here’s the playbook: five principles to expand influence at Staff level.

Stay curious, learn in public. Ask the “obvious” questions, then teach the answers so the next person doesn’t stumble. Curiosity compounds when it’s shared.

Engineer your communication. Frame your work in terms of others’ goals, write crisply, and time your updates so people feel looped in rather than blindsided.

Relationships. Relationships. People bend over backward for colleagues they respect and enjoy working with. Relationships grease the wheels of execution.

Find the third solution. When stuck between A and B, zoom out. Often the real constraint points to an overlooked option that satisfies both sides.

Be responsive. Reliability builds your reputation. Quick replies unblock others, set a cultural tone, and make you the person people want in their loop.

Influence is built one fast reply, one well-framed pitch, and one relationship at a time.

Other highlights 👇

I Reviewed 50 PRs in the Last 6 Months - Here Are the Most Common Mistakes I Found

After reviewing 50+ PRs in the past six months, Javinpaul and Soma noticed the same issues popping up again and again. None of them is shocking on its own, but together they’re what make code reviews slow, frustrating, and ineffective.

Think of this list as a mirror for your team’s review culture. If you spot these patterns, you’ve got opportunities to tighten things up and ship faster.

Here are the most common offenders:

Paper-thin PR descriptions. “Fixed bug” doesn’t cut it. A PR should tell a story: the problem, the why, the trade-offs.

Mega-PRs. Too big to review properly, impossible to revert safely. Break them down.

Bad naming. doWork() and temp might save you keystrokes today, but they cost hours later.

Business logic in controllers. Classic rookie mistake. Keep controllers thin; push logic into services.

Missing or shallow tests. Testing getters/setters ≠ real testing. Cover edge cases and failure paths.

Ignoring lint/style rules. Don’t make reviewers be your formatter. Automate it.

Overengineering small features. Abstract factories for a CRUD endpoint? YAGNI.

Breaking backward compatibility. Renaming APIs without deprecation is how production breaks.

Skipping docs. Reviewers shouldn’t have to reverse engineer your intent. Update contracts, READMEs, Swagger.

No observability. If it breaks in prod, will you even know? Add logs, metrics, failure handling.

👉 Bonus: Poor review etiquette. Don’t get defensive, don’t rubber-stamp, and don’t ghost. Reviews are collaboration, not combat.

PRs are about culture as much as they are about code quality, clarity, and trust. Clean, thoughtful PRs make teammates want to merge your work and make you the kind of engineer people want to review for.
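To make the backward-compatibility item concrete, here is a minimal Python sketch of renaming a function without breaking existing callers: the old name stays as a thin alias that emits a DeprecationWarning and delegates to the new one. The names get_user, fetch_user, and deprecated_alias are hypothetical and only illustrate the pattern; they are not taken from the original article.

```python
import functools
import warnings


def get_user(user_id: int) -> dict:
    """New, preferred name (hypothetical example API)."""
    return {"id": user_id, "name": f"user-{user_id}"}


def deprecated_alias(new_func, old_name: str):
    """Expose new_func under old_name, warning callers to migrate."""

    @functools.wraps(new_func)
    def wrapper(*args, **kwargs):
        warnings.warn(
            f"{old_name}() is deprecated; use {new_func.__name__}() instead",
            DeprecationWarning,
            stacklevel=2,  # attribute the warning to the caller, not this wrapper
        )
        return new_func(*args, **kwargs)

    # functools.wraps copied get_user's name; restore the old public name.
    wrapper.__name__ = old_name
    wrapper.__qualname__ = old_name
    return wrapper


# The old name keeps working during the deprecation window.
fetch_user = deprecated_alias(get_user, "fetch_user")
```

Once call sites have migrated and the warning has been visible for whatever deprecation window your team agrees on, the alias can be deleted in a release that calls out the removal.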

“It’s Done” Is a Lie Without Shared Understanding

Sprints that die in the “last 10%” have a clarity problem. Fix that, and “done” finally means done.

“It’s Done” But Not Really

Every engineering team knows this moment: someone says “It’s done.”

But… it’s not in production. Tests are missing. Edge cases are ignored. And the PM doesn’t even agree on what “done” means. That gap between “we shipped code” and “we delivered value” is one of the biggest killers of velocity. Most teams don’t notice it until they’re buried in rework. The root problem is a lack of shared understanding.

Let’s break it down.

Teams confuse shipping with finishing: closing tickets ≠ solving user problems.

The “last 10%” feels like 90%: edge cases, bugs, feedback loops, redoing “done” work.

Docs are treated as an afterthought instead of the tool that builds alignment upfront.

Matt Watson shares practical steps for getting everyone on the same page before code is written:

✅ Start with problems, not tickets. Clarify the why before anything goes into Jira.

✅ Define success upfront. Agree on what “done” means before building.

✅ Write for your future self. Make docs that explain intent, not just implementation.

✅ Embed context, don’t bolt it on. Living docs evolve as the team learns, instead of dying in Notion.

AI Gotchas

AI can write code faster than ever, but it can’t explain what problem the code solves. With distributed teams and compressed timelines, context has become the new developer multiplier. The best teams are building shared context that makes finishing possible.

Why Measuring AI Coding Impact Matters

AI coding assistants are spreading quickly through engineering teams, yet LeadDev reports that 82% of organisations aren’t measuring their impact. That gap is more than a detail: it determines whether leaders can make informed decisions about how AI fits into their delivery model.

Adoption numbers alone don’t reveal much. What matters is whether review cycles are shortening, whether velocity gains are consistent across teams, and whether uneven usage is distorting productivity baselines. Without that clarity, cost allocations risk being driven by perception rather than proof.

There’s also a long-term dimension. AI changes the texture of delivery as its output propagates through pipelines. Patterns in defect density, distribution of PR sizes, reviewer response times, and even refactor frequency can shift subtly. Left unmeasured, these shifts can compound into hidden drag: increased cognitive load for reviewers, erosion of codebase consistency, or brittle modules that raise MTTR.
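As an illustration of what measuring beyond adoption numbers could look like, here is a minimal Python sketch that compares median time to first review, median open-to-merge cycle time, and median PR size between AI-assisted and other PRs. It assumes you can export PR timestamps and sizes from your Git host and can somehow tag which PRs were AI-assisted; PullRequest, summarize, and compare_cohorts are hypothetical names, not part of any tool mentioned in this issue. Medians are used instead of means so that a few unusually slow or large PRs don’t dominate the picture.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from statistics import median


@dataclass
class PullRequest:
    # Hypothetical record shape; real fields depend on what your Git host exports.
    opened_at: datetime
    first_review_at: datetime
    merged_at: datetime
    lines_changed: int
    ai_assisted: bool  # assumption: PRs can be tagged as AI-assisted somehow


def hours(delta: timedelta) -> float:
    """Convert a timedelta to fractional hours."""
    return delta.total_seconds() / 3600


def summarize(prs: list[PullRequest]) -> dict[str, float]:
    """Median review metrics for one cohort of PRs."""
    if not prs:
        return {"count": 0}  # statistics.median raises on empty input
    return {
        "count": len(prs),
        "median_first_review_h": median(hours(p.first_review_at - p.opened_at) for p in prs),
        "median_cycle_h": median(hours(p.merged_at - p.opened_at) for p in prs),
        "median_lines_changed": median(p.lines_changed for p in prs),
    }


def compare_cohorts(prs: list[PullRequest]) -> dict[str, dict[str, float]]:
    """Split PRs by the ai_assisted flag and summarize each group."""
    return {
        "ai_assisted": summarize([p for p in prs if p.ai_assisted]),
        "manual": summarize([p for p in prs if not p.ai_assisted]),
    }
```

Tracked over time rather than as a one-off snapshot, numbers like these are one way to check whether review cycles are actually shortening. A gap between cohorts shows correlation, not proof that the assistant caused it.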

That’s why measurement isn’t a side activity. It’s the basis for deciding which tools to scale, where adoption needs calibration, and how to capture upside without quietly trading away resilience. Here’s one perspective on how this measurement lens works.


That’s it for Today!

Whether you’re innovating on new projects, staying ahead of tech trends, or taking a strategic pause to recharge, may your day be as impactful and inspiring as your leadership.

See you next week(end), Ciao 👋

Credits 🙏

Curators - Diligently curated by our community members Denis & Varun

Featured Authors -

Sponsors - This newsletter is sponsored by Typo AI - Engineering Intelligence Platform for the AI Era.

1) Subscribe — If you aren’t already, consider becoming a groCTO subscriber.

2) Share — Spread the word amongst fellow Engineering Leaders and CTOs! Your referral empowers & builds our groCTO community.